Research

The primary research goal of the Chair of Autonomous Systems and Mechatronics (Lehrstuhl für Autonome Systeme und Mechatronik) is to develop technical systems that functionally support their users and offer them a positive user experience. To foster user acceptance in a targeted way, human factors and technical requirements are given equal consideration. Our research approach is outlined in the figure below and combines methods from engineering and the human sciences. We demonstrate our findings on wearable systems such as prostheses or exoskeletons, on cognitive systems such as collaborative or humanoid robots, and on general applications involving close human-robot interaction.

The central research questions of the Chair of Autonomous Systems and Mechatronics are:

- Which design, control, and interface implementations support human-machine interaction?

- How do human factors influence human-machine interaction, and how can they be taken into account systematically during design?

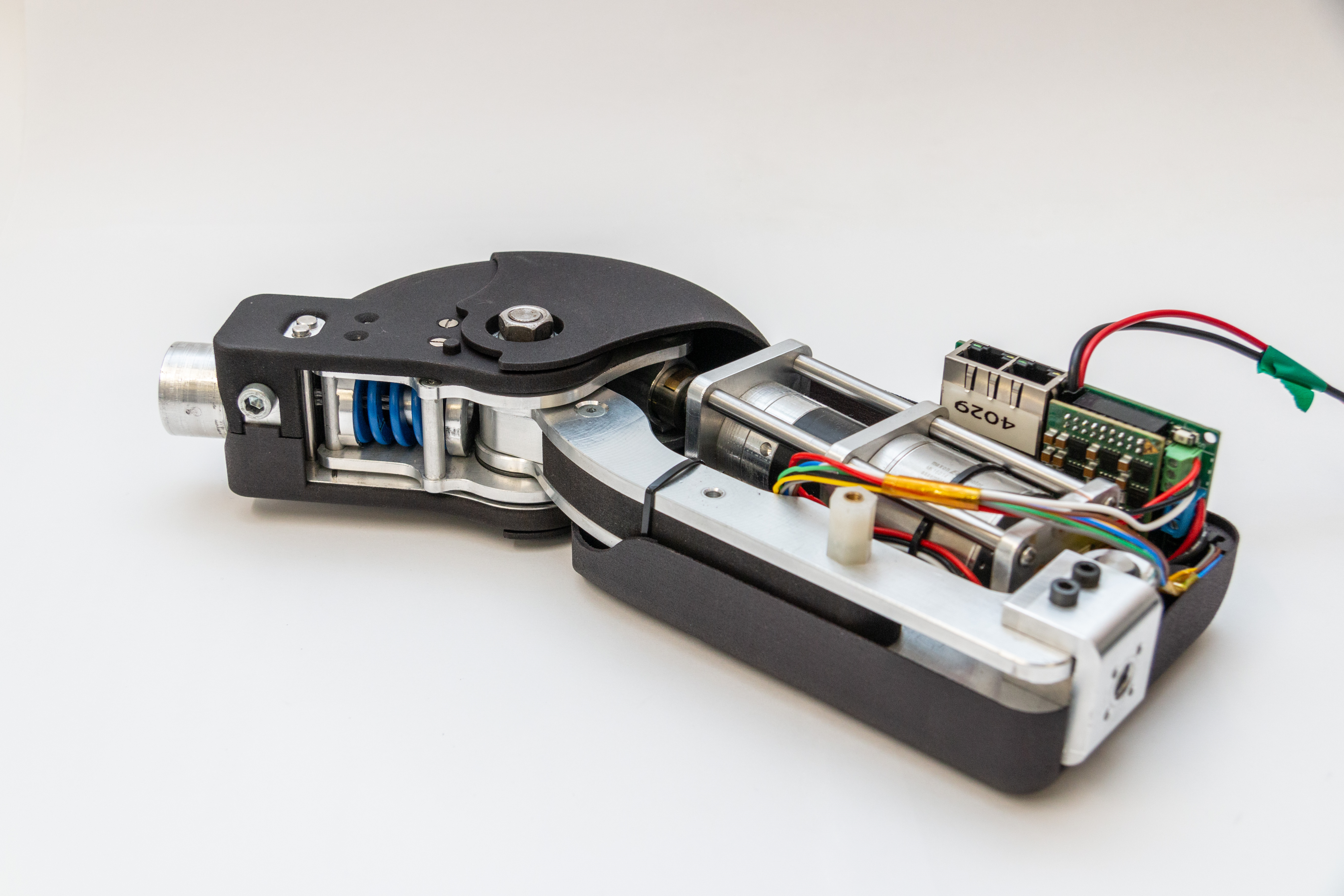

Komponenten und Regelung |

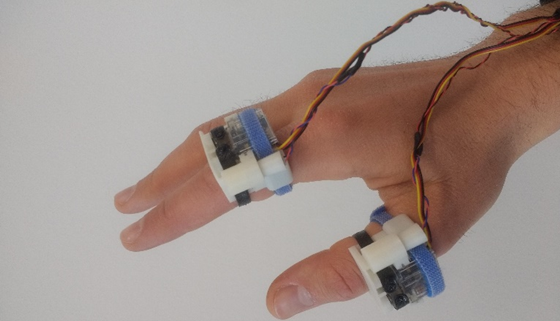

Schnittstellen und Interaktion |

|

Tragbare Systeme |

|

|

Kognitive Systeme |

|

|