Knowledge Integration with Retrieval-Augmented Generation (RAG) from Mixed Data Sources

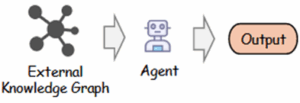

Want to make AI smarter and more reliable? Join our project on Retrieval-Augmented Generation (RAG)! Large Language Models (LLMs) are impressive, but smaller ones often "hallucinate": they guess instead of knowing. Why? Because they lack access to fresh, specialized, or local information. RAG addresses this by letting an LLM retrieve relevant information before answering, from sources in different formats: structured data, unstructured text, websites, and local files. This project explores how to combine these heterogeneous sources into a unified RAG pipeline. The goal is to improve retrieval quality and the accuracy of generated responses, making LLMs more useful for complex information needs that span mixed sources.
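As a rough illustration of such a unified pipeline, here is a minimal sketch under stated assumptions. It converts structured rows and unstructured text into chunks that each keep a source label, retrieves the best-matching chunks for a question, and builds a prompt that asks the model to cite its sources. All function names and the toy data are hypothetical. The simple TF-IDF keyword score stands in for the embedding-based retrieval that RAG systems commonly use. Fetching web pages and calling an actual LLM are not shown; text extracted from a web page could be passed to `from_text` like any local file.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Chunk:
    text: str
    source: str  # origin of the text, kept so answers can cite it


def tokenize(text):
    # Lowercase alphanumeric tokens; a real system would use embeddings instead.
    return re.findall(r"[a-z0-9]+", text.lower())


def from_table(rows, source):
    # Structured data (hypothetical helper): serialize each row as "key: value" text.
    return [
        Chunk("; ".join(f"{k}: {v}" for k, v in row.items()), f"{source}#row{i}")
        for i, row in enumerate(rows)
    ]


def from_text(text, source, size=50):
    # Unstructured text (local files, scraped pages): split into windows of `size` words.
    words = text.split()
    return [
        Chunk(" ".join(words[i:i + size]), f"{source}#chunk{i // size}")
        for i in range(0, len(words), size)
    ]


def retrieve(query, chunks, k=3):
    # Score every chunk with a simple TF-IDF keyword match and return the top k.
    docs = [Counter(tokenize(c.text)) for c in chunks]
    n = len(chunks)

    def idf(term):
        df = sum(1 for d in docs if term in d)
        return math.log((n + 1) / (df + 1)) + 1

    q_terms = set(tokenize(query))
    scored = [(sum(d[t] * idf(t) for t in q_terms), c) for d, c in zip(docs, chunks)]
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable: ties keep input order
    return [c for score, c in scored[:k] if score > 0]


def build_prompt(query, hits):
    # Number each retrieved chunk with its source so the answer can cite [n].
    context = "\n".join(f"[{i}] ({c.source}) {c.text}" for i, c in enumerate(hits, 1))
    return (
        "Answer using only the sources below and cite them as [n].\n"
        f"{context}\n\nQuestion: {query}"
    )


if __name__ == "__main__":
    # Toy data for illustration only.
    chunks = from_table(
        [{"product": "sensor A", "price_eur": 40}, {"product": "sensor B", "price_eur": 55}],
        "prices.csv",
    ) + from_text("Sensor A operates between -20 and 60 degrees Celsius.", "notes/sensor_a.txt")
    question = "Which temperatures does sensor A operate between?"
    print(build_prompt(question, retrieve(question, chunks, k=2)))
```

Keeping the source label attached to every chunk from ingestion onward is what makes source tracking possible: whatever the retriever returns, the prompt (and hence the answer) can point back to the exact table row or text passage it came from.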


We are looking for a student with a strong interest in LLMs. Advanced programming skills are not required; the emphasis will be on scientific reading, critical thinking, and analysis.

Your tasks are as follows:

- Review the literature on RAG techniques for LLMs.

- Build a chatbot using both online and local data sources, with reliable source tracking.

- Evaluate the chatbot's performance and compare it against benchmarks.

- Analyze and discuss results.


Department of Electrical-Electronic-Communication Engineering

Yongxu Ren, M.Sc.

Chair of Autonomous Systems and Mechatronics